In 2018, AI is already making life-changing decisions, like giving out health advice or helping people secure a loan. It can even help people find a job: recruitment firm PeopleStrong has launched AltRecruit, which uses AI’s ability to sift through data without bias to match people to the perfect job. But are machines always impartial? As part of an ongoing effort to connect our members with thought leaders from our network, we invited some of the best creative minds to a Breakfast Briefing, where tech philosopher Tom Chatfield explored how we can rid AI of bias.

According to writer and tech philosopher Tom Chatfield, as AI’s capabilities grow, programmers must take responsibility for the data they use to fuel machine learning. That means addressing prejudices and biases before they are accidentally passed on to an evolving tech system. “An algorithm is not evil,” he says. In his book, Critical Thinking: Your Guide to Effective Argument, Successful Analysis and Independent Study, he further explains: “Algorithms can swallow and regurgitate any biases contained in the original data… The inscrutability of most machine learning processes can make this process difficult to either critique or reverse engineer, unless you have expert understanding of the original data.”

The solution, Chatfield suggests, is to ‘unpick’ the way we present the source data that is fed into an AI system. This means rethinking what we define as success and excluding certain indices, such as age, ethnicity or origin. “If we’re looking for a candidate for a technical job, we can take the dirty bits of the data – names, ages, gender, postcodes, or early education – and we can wipe that out,” he says. “Suddenly, we have an algorithm that is as unbiased as possible. We can do this as we are aware of such biases, whereas a computer is not. It sounds very miserable, but the algorithms are just doing what we tell them.”
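To make the idea concrete, here is a minimal Python sketch of the kind of redaction Chatfield describes, assuming candidate data arrives as simple records with named fields. The field names, the `SENSITIVE_FIELDS` set and the `redact` helper are hypothetical illustrations, not part of AltRecruit or any other real recruitment system.

```python
# Hypothetical field names standing in for the "dirty bits" Chatfield lists:
# names, ages, gender, postcodes and early education.
SENSITIVE_FIELDS = {"name", "age", "gender", "postcode", "early_education"}


def redact(candidate: dict) -> dict:
    """Return a copy of a candidate record with the sensitive fields removed.

    The original record is left untouched; only keys listed in
    SENSITIVE_FIELDS are dropped.
    """
    return {key: value for key, value in candidate.items() if key not in SENSITIVE_FIELDS}


# Illustrative (made-up) applicant data.
candidates = [
    {
        "name": "A. Smith",
        "age": 52,
        "gender": "F",
        "postcode": "AB1 2CD",
        "early_education": "State school",
        "years_experience": 12,
        "skills": ["python", "sql"],
    },
]

# Only the redacted records would be passed on to a matching model.
redacted = [redact(c) for c in candidates]
print(redacted[0])  # {'years_experience': 12, 'skills': ['python', 'sql']}
```

The filter works as a blocklist: only the listed fields are removed, and any field not on the list passes through unchanged, so deciding what goes on that list remains a human judgement.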

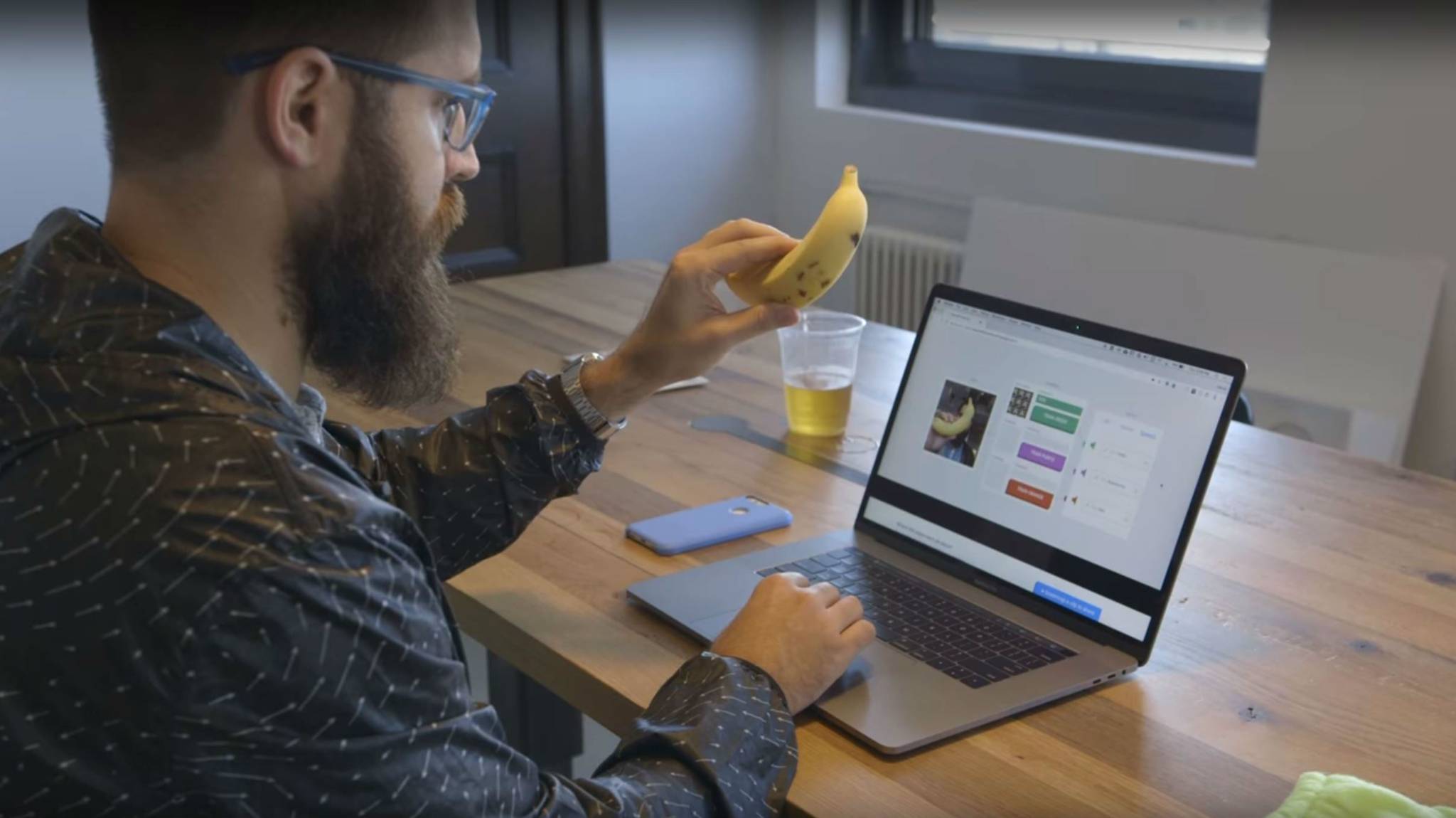

On the whole, people are optimistic about the future; 67% of people globally believe technology will make the world a better place, while 76% feel comfortable using new tech. Even AI is being normalised and demystified thanks to the efforts of major tech firms; Google’s Teachable Machine is a consumer tool people can use to familiarise themselves with machine learning by way of a picture association game. Users feed it stimuli through a microphone and camera – from showing it fruit to using various hand gestures – which it learns to associate with a cache of images and sounds over time.

“We’ve gotten to the stage when companies, governments and others have just about stopped going ‘Technology, wow – we are going to solve the world,’” says Chatfield. “People are now getting to the stage where they say ‘Okay, we use this all the time. What kind of rules and guidelines might stop us completely destroying ourselves?’” “AI isn’t sentient in a real sense, so the insecurities around intelligent technology are purely in the eye of the beholder. By scrutinising our own values, we’ll find issues that skew data at its source,” says Chatfield. Doing so gives us an opportunity to start a conversation about a better future, one where humans and technology work together to improve everyday life.

Tom Chatfield is a British writer, broadcaster and tech philosopher. He’s interested in improving our experiences of digital technology and better understanding its use through critical thought. He’s penned six books exploring digital culture, including Critical Thinking, which was released in October 2017.

Matt McEvoy is the deputy editor at Canvas8, which specialises in behavioural insights and consumer research. In a former life, he was a journalist working in the sports, music and lifestyle fields.